Embedded Vision everywhere?

Ask the Experts: Smarter Vision with Embedded Vision

Everyone is talking about embedded vision. But what is embedded vision? A panel discussion at the last VISION fair – organised by the VDMA IBV – tried to answer this question and others, such as: will embedded vision make machine vision more straightforward, more successful, or maybe cheaper?

What is Embedded Vision?

“If we get the same reliability we know from basic vision, then embedded vision could be a good deal.” – Andreas Behrens, Sick AG (Image: Sick AG)

Arndt Bake (Basler): We define it based on the processor that people are using. So we differentiate between PC vision, with a PC/Intel architecture, and embedded vision, where another architecture, like ARM processors, is used.

Olaf Munkelt (MVTec): There are several important factors. One is the form factor: devices get much smaller. Number two is low power consumption. We think customers deserve the same power in terms of software on an embedded system as they enjoy on a PC system.

Carsten Strampe (Imago): Our focus is to integrate all the interfaces needed for machine vision and all the processing power near and inside the machine. Therefore we support x86 platforms with Windows Embedded, but nowadays also ARM processors.

Maurice van der Aa (Advantech): From Advantech’s point of view, as global market leader for IPCs, the focus is on x86-based architectures to support the embedded vision market. With x86 technology combined with other architectures, like FPGA and/or GPU, we cover a wide range of the embedded vision market.

Richard York (ARM): For us embedded vision is more of a transformation: taking technologies from other markets, such as mobile or automotive, and bringing them into what is traditionally a world used to embedded PCs. Those technologies are typically an order of magnitude lower in cost and an order of magnitude lower in power, and there is a much greater ecosystem around them.

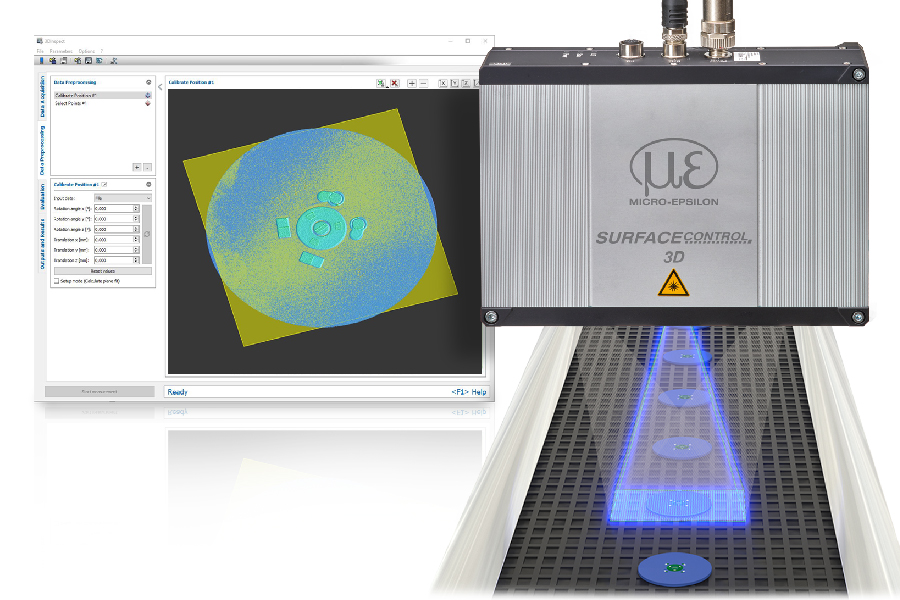

Andreas Behrens (Sick): Sick is a sensor company that knows how to deal with sensors in an embedded vision world. Make it smart and combine it with some clever ecosystem so that you can embed these sensors easily into your machine.

Why will Embedded Vision change machine vision?

“With the embedded vision cost point, machine vision can break into markets where the technology was previously far too expensive.” – Arndt Bake, Basler AG (Image: Basler AG)

Bake: If you look at the hardware costs of a vision system today, a considerable part of what people pay goes to the processing architecture. If you exchange the Intel-based processing architecture for an embedded architecture, you can reduce the costs quite dramatically. Therefore vision will not stay in the factory any more. With this cost point it can break into markets where the technology was previously far too expensive.

Strampe: We see more powerful devices inside of the machine near the application, systems with lower power and more intelligent industrial interfaces.

Munkelt: First of all it is a transformation process, which we see right now. It opens up new opportunities, new markets and new applications. The question is, how far will the transformation process go? If I look around at this fair, I see many new line scan cameras, delivering 18 kHz line rates and filling up the main memory within a millisecond. So there are certainly many applications that we still need to address with standard PC-based solutions. The question is: where will we be in five years regarding this ratio?

van der Aa: Technology will become cheaper. It is not only about the front end, because you can make the front end very cost-effective, but you still need to be able to process it and this will be done on the back end. I believe in a two-way structure: Cost-effective on the front side and a high performance system with more computing power on the back-end of the application.

York: I was with a company recently doing retail analytics, putting an embedded system into lots of locations around the retail site to understand and monitor what the people on that site are doing. That opens up tremendous opportunities way beyond what we see here for technologies. Think of how we can apply that technology to other markets, which are adjacent but substantially higher volume, and can then offer interesting services as well.

Behrens: What about software and solving applications in an easy way? How do you embed it into your machine? I think there is a need to transform it a little bit into a new technology, to make it easy for integrators to build on a reliable system or sensor. A sensor that you can buy everywhere, and get services for. An ecosystem in which you can immediately start using a sensor that already comes with firmware.

“Customers deserve the same power in terms of software in an embedded system as they can enjoy in a PC system.” – Dr. Olaf Munkelt, MVTec Software GmbH (Image: MVTec Software GmbH)

Is embedded vision really useful for every machine vision application?

Bake: I think the transition will not be so quick on the factory side. We are looking more at the enabler side, because new markets, like retail, cannot put a PC into those applications. So the cost point becomes the enabler. These new markets will shape the market structure for embedded vision technology from the supplier point of view.

Which new interfaces will be coming for machine vision?

Behrens: We see a clear trend in ideas born in Industrie 4.0: protocols for the slow loop. I like to distinguish between a fast loop, where you typically have to process your data and the processed data goes to a computer or whatever, and a slow loop, where you use the data in completely different ways. Therefore new protocols are born, like MQTT, OPC UA and others. You have to dig into a completely different world with big data and cloud services.
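The fast-loop/slow-loop split described here can be illustrated with a minimal sketch. It is not taken from the panel: the class name `SlowLoopAggregator`, the sensor ID `cam-01` and the payload fields are all hypothetical. It assumes the fast loop produces one pass/fail result and one cycle time per inspected part, and that the slow loop only needs an occasional summary.

```python
import json
from collections import deque


class SlowLoopAggregator:
    """Buffers per-part results from the fast loop and summarises them.

    Hypothetical helper for illustration; not part of any vendor SDK.
    """

    def __init__(self, sensor_id, window=100):
        self.sensor_id = sensor_id
        # Only the most recent `window` results are kept.
        self.results = deque(maxlen=window)

    def record(self, passed, cycle_ms):
        """Called from the fast loop after each inspection."""
        self.results.append((passed, cycle_ms))

    def summary(self):
        """Return a JSON string usable as a slow-loop message payload."""
        n = len(self.results)
        n_pass = sum(1 for passed, _ in self.results if passed)
        mean_ms = sum(ms for _, ms in self.results) / n if n else 0.0
        return json.dumps({
            "sensor": self.sensor_id,
            "inspected": n,
            "pass_rate": n_pass / n if n else None,
            "mean_cycle_ms": round(mean_ms, 2),
        })


# Fast loop: four inspections with (pass/fail, cycle time in ms).
agg = SlowLoopAggregator("cam-01")
for passed, ms in [(True, 4.0), (True, 5.0), (False, 6.0), (True, 5.0)]:
    agg.record(passed, ms)

# Slow loop: one compact summary instead of every raw result.
payload = agg.summary()
print(payload)
```

In a real system the resulting string would be handed to whatever transport the plant uses, for example as the payload of an MQTT message or mapped onto OPC UA variables. The sketch leaves that step out so that it runs without any third-party library.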

Munkelt: The PC itself is some kind of standard, defined by the CPU, the operating system etc. We don’t have this environment at this point in the embedded vision world. So we still see a diverse set of different CPUs. Today we have ARM; ten years ago everybody spoke about TI. On the operating system side we talk about Linux, but there are also commercial systems like VxWorks out there. If we simply take an embedded device, we have to admit that the complexity of this device is higher than it is on the PC side. More people are comfortable working with a PC-based solution than with an embedded vision solution. There are several discussions to standardize the interface between the sensor and the CPU side. There are efforts to give OPC UA a companion specification to make it easier to work on the system side. We should look into this matter to avoid many different configurations that leave the customer simply saying ‘wow’. We need some kind of streamlined process to make it easier to apply.

Bake: If you look at the component market on the factory side today, there has been a lot of effort on standardization between the camera and the processor. We need something similar between board-level products, e.g. board-level to chip-level or between sensor modules and processor modules. The other side is a more standardized way of connecting the application and the vision system. How do you connect your system to the central database?

York: I think there is an area: the software development space. Companies can be enabled to develop on a desktop and then transport it down to a much lower cost embedded system. That would really open up the market, because it enables you to develop seamlessly in a desktop world but then deploy it into the mass market with much lower cost embedded systems.

Strampe: We see more and more manufacturers of processors for the industrial market combining ARM cores with different special interfaces, for example a dual-core ARM for the computing power and an additional RISC processor that manages a real-time Ethernet fieldbus. For example, you can integrate your vision system into an EtherCAT environment, and everything is integrated on one chip running Linux.

van der Aa: If you look into the future you will probably see a two-step solution: x86 plus FPGA. Probably in the future they will merge the technologies all-in-one, so we will have more powerful and cost-effective chip solutions.

FPGAs are powerful but also complex. Who can use them?

Munkelt: FPGA programming is a mess. If you have 100 people who can program a PC-based system running Windows, you may have ten people who are comfortable in a Linux environment and probably one person who is comfortable in an FPGA environment. For that purpose we have companies like Silicon Software, which reduce this complexity, because they allow you to use an FPGA. But we see different hardware architectures right now, and we need them, by the way. The machine vision community has never been able to develop a perfect CPU by itself, and I can imagine some architectures where this would really be of great benefit. We actually need your invention on the CPU and on the FPGA side to provide our own algorithms and to add benefit for the users. In certain applications we need an FPGA; in other applications it is okay to work on an ARM-like architecture.

Strampe: In machine vision and in our hardware we are using a lot of FPGAs, but mainly for communication functionality and for functions that stay fixed for a longer time. When it comes to image processing, people like to use C++ code or libraries, and then it becomes more complex and difficult to take an image processing algorithm and put it into the FPGA. As we are familiar with Altera FPGAs, we are watching the combination of Intel and Altera and what will happen there. Meanwhile Altera is more of an Intel company. Altera, like Xilinx, also has SoCs that combine multi-core ARM processors and FPGA, and Intel says they will use ARM in the future.

Bake: We need to differentiate between factory and embedded vision applications. In a factory application a typical customer is the vision system engineer. He takes the components, plugs them together and has some colleagues working on the software and writing the code. These people normally don’t have FPGA experience. There you have a problem if you face an FPGA programming task. If you go to an embedded vision application it is different, because you are dealing with electronic boards, and if there is customization in the electronic boards you talk about electronic integration. People programming ARM devices, connecting memories and also FPGAs are very common in those companies. It is not as common as PC processing, but I think from a technology point of view it is OK. And if you talk to OEMs on the embedded vision side, you find many people able to cope with FPGAs.

York: We are very confident that both Altera and Xilinx remain faithful to ARM. But I see that FPGAs have been wonderful tools for dealing with the connectivity mess you often get in an embedded system. A SoC/FPGA has large processor capabilities and looks just like a conventional processor, but you’ve got all that flexibility to put in different I/Os or connectivity, depending on exactly how you want to adapt your product for different markets or interfaces. That is the power of these FPGAs – largely hidden from the end user, but a real boon to system developers who want to cope with the multitude of different markets and connectivity options but want to have only one physical product.

Will machine vision become easier with embedded vision?

“I don’t believe that the IPC will decrease only because of embedded vision.” – Carsten Strampe, Imago Technologies GmbH (Image: Imago Technologies GmbH)

Munkelt: There are many applications, in agriculture or logistics or in security and surveillance, where you would potentially need many vision sensors. If we talk about embedded vision, we always have this kind of application in mind. From a user’s point of view it is much easier, because the user probably does not need to configure it. He just needs to switch it on and then people are counted exactly. Each of these systems costs only 30 euros. But to build it, you still need some time and you still need to spend some effort.

Strampe: I can fully agree. It will take more effort on the application side and become easier on the user side.

Behrens: That is exactly the viewpoint of a sensor: to encapsulate such an FPGA structure and to hide those ARMs from the application developers. They want to develop an application, not dig into FPGA details or deal with operating systems. So if you have the functions right in place, you can put all those things together into an ecosystem and offer this support to an application programmer.

Which advantages does embedded vision bring along for IPC producers?

Strampe: The answer comes from the market. Some people prefer the Windows operating system, because they have to integrate a complex database into the image processing application. Other people have played with a Raspberry Pi board and are familiar with Linux; they are more attracted to an ARM platform.

Bake: We are all engineers and believe that decisions are made purely on technology, which I don’t think is the case. So why are the Intel-based architectures so successful on the factory side? The PC was never built for the factory automation market, because that market is far too small. The only reason it was used there is that it was so popular. If the most popular processors for consumers now change over from PC-based to embedded-based, we can guess which processor will be the most attractive on our side too, if the same mechanics apply.

What about Deep Learning?

“There is absolutely no doubt that machine learning in all of its various forms is going to change the face of this industry completely.” – Richard York, ARM Ltd. (Image: ARM Ltd.)

York: There is absolutely no doubt in my mind that machine learning in all of its various forms is going to change the face of this industry completely. How quickly will it do it? Who knows. But it cannot be ignored. The automotive industry is investing billions of dollars in machine learning for highly automated driving. That is going to have an impact.

Munkelt: Billions of dollars have already gone into this technology to differentiate cats, dogs, grandma, grandpa or people. This is what we call an identification technology. Apart from that, which also includes bar code and data code reading, we also have topics like measuring in 1D, 2D and 3D, metric measurement and camera calibration. None of this is part of deep learning. Deep learning is one more step in the direction of identifying objects. We should be careful to differentiate between training and actually identifying, because the training process in deep learning is very time-consuming and computationally very expensive. You can parallelize this, but in order to train these nets you typically need days or weeks.

York: The fusion of machine learning and conventional visual algorithms is certainly the key. If you can bring those two domains together successfully, that would be really powerful. I absolutely agree, there is no substitute for many of the fundamental vision algorithms that people have developed over the decades.

Munkelt: MVTec uses deep learning to improve OCR and it has a benefit. It gets us a few more percent of recognition rate, which is critical in a machine vision environment.

van der Aa: But who is going to be the key player: the visual computing appliance technology, the GPU or the FPGA? We see Deep Learning on the back end side. So the embedded vision on the front end is capturing images and the back end side is learning from what is captured. So this part is on the GPU side.

Munkelt: Maybe in two years we will sit here and see that there is another super algorithm and we need another architecture to run it. If we have a new technology which adds value to our existing or new customers we will use it, no matter what it is.

If embedded vision is becoming so fast and cheap, who still needs an IPC?

“Even if there is embedded vision for the preprocessing part, there is always something on the back end side that requires faster processing.” – Maurice van der Aa, Advantech (Image: Advantech Europe BV)

van der Aa: I think there will always be IPCs. Even if there is embedded vision for the preprocessing part, there is always something on the back end side, and there are still applications that require faster processing, maybe a two-step solution with an FPGA or a GPU. IPCs will still exist, maybe in a different form factor, but they will definitely exist.

Strampe: What I see on the IPC side is that engineers have more and more different tasks, all of them realized on the IPC. I don’t believe that the IPC will decrease only because of embedded vision.

Behrens: For sensor fusion: combining different sensors, not only those based on vision technology but also LIDAR sensors, or simply combining vision with an encoder on the vehicle you are controlling.

Bake: We compare that to other trends, e.g. the frame grabber. Once you have technologies that can take the frame grabber out, they are far more cost-effective and appeal to a large majority of customers. Nevertheless you have a certain group of customers who are performance-driven and who stay with the high-end technology. I think the transition to embedded in the factory will be slow. It will develop first in other markets and then slowly come back to the factory side, and then the growth of embedded technology will be higher than that of IPCs, up to a point.

What do you think will be the markets for embedded vision?

Bake: A very interesting one is retail. You don’t see a general purpose solution there, it is really a single purpose solution.

van der Aa: Transportation, security and surveillance are definitely going to use that technology. If you look at the security side, the cost of the camera always has an impact. Security is becoming much more important all over the world. If we need to filter the data that comes from a standard camera, we can do that with an industrial camera.

Behrens: More closely related to identification are Track & Trace and inline inspection. You can use it in automotive and in other similar markets.

What can embedded vision learn from machine vision?

Bake: Embedded solutions come from people who have experience with electronic design and software design. The whole vision part is missing: how to handle light and optics, transform that into an image and then deal with the image. What is interesting is the component-based structure that has been developed in machine vision, which helps customers combine different sensors with different processors to deal with the variety of different applications.

Strampe: They can learn about reliability from machine vision in all aspects, hardware, firmware, libraries, the whole system. Sometimes embedded people start from zero to design an embedded vision system, which is already available in machine vision.

Munkelt: From the algorithm side I would say that there are plenty of algorithms which have proven themselves for many years in the machine vision world. We have developed a very distinct technology over decades, and if they look a little closer at this, they can make a real machine vision application on an embedded system much quicker.

van der Aa: From a system point of view, I think the most important part is Longevity & Revision Control, to provide stable platforms to our partners for a longer period of time.

York: What we want to do is learn broadly from the whole industry about embedded vision and machine vision, so that we can do a much better job of developing what we build, because we are a key enabling factor to a lot of what is going on here.

Behrens: If we get the same reliability we know from basic vision, then embedded vision could be a good deal. On the other hand, if this does not happen, then the customer will decide what the right way forward is.

Advantech Europe Maurice van der Aa, Product Sales Manager

ARM Richard York, VP Embedded Marketing

Basler Arndt Bake, CMO

Imago Technologies Carsten Strampe, Managing Director

MVTec Software Dr. Olaf Munkelt, CEO

Sick Andreas Behrens, Head of Marketing&Sales Barcode-RFID-Vision