How will future cameras differ from today’s cameras?

Torsten Wiesinger, Lucid Vision: The big difference is quite hard to judge. What will never change is that a camera will always have the same task: Providing the user with the best quality images in a reliable way. I personally think we will see bigger differences in the software or the integration in the customers’ ecosystem.

Dany Longval, Teledyne Lumenera: If we look 20 years back, cameras have not radically changed. Sure, the performance has increased but we have the same core capabilities. So I think we will see a further increase in performance in the near future, because I don’t think we have reached our limits in that area.

Sören Böge, Basler: I agree. Sometimes the transformation speed feels extremely high, but if you look in the rear-view mirror, the general task has mainly stayed the same. But what you can see is the same trend as in chip development: a reduction of development cycles and growing market fragmentation.

Andreas Waldl, B&R: I think cameras need to become much more intelligent in order to reduce the bandwidth needed for transmission. As sensor sizes keep growing, we need to find smarter approaches than just faster and faster transmission of data, much of which is useless. This can be done by pre-processing on the camera or directly on the image sensor.

Which functionalities will be moved from an external computer into the camera in the near future?

Waldl: I don’t think topics like reading data codes or detecting objects should come to simple cameras. Those are tasks for dedicated smart cameras like we have in our portfolio. Standard cameras should be able to reduce data to essential information. Pre-processing based on deep learning can help, for example using low-power – but still powerful – inference controllers.

Wiesinger: It depends of course on the power of the back-end system. I cannot imagine that tasks like only QR code reading will be done with one standard camera. For years we’ve been talking about shrinking the PC and the camera together into a small box, and we still have customers who wish to run four or five specific applications at once, which is not possible today.

Böge: I would disagree with saying that every camera should become a smart camera. If we think about high performance cameras that have multiple Coaxpress channels, we see 50 Gbit/s image streams, where integrated processing is hard to realize in the camera. Furthermore, you will never get the preprocessing transferred from your backend system into your camera because the final systems are so individual and complex. So there will be cameras with included neural networks, for example, but I’m quite sure there will not be this one-fits-all smart camera that will cover all applications.

Longval: The first things that will move into the camera are measuring, filtering or applications like object detection. I can see that happening but it will happen in steps. At that point it becomes harder to define what is a camera and what is a smart camera.

Will the camera of the future still be a generic device or will it migrate towards an application specific module?

Longval: Camera design and camera architecture by themselves are becoming more and more flexible. Just about every camera uses FPGAs or a level of buffering. You can currently offer this level of flexibility to your customer. Surely there will always be a good market for simple off-the-shelf cameras but we will see more of these application specific solutions.

Böge: I see some segments where you can find the demand for very application-specific solutions, for example in the high performance market. But in the high volume segment a 5MP global shutter camera with good image quality can cover such a wide range of applications that you cannot define a specific purpose upfront.

Wiesinger: I agree that the mainstream will stay generic but we see a lot of demand from OEM-customers for something that is easy to integrate into a specific application. So there is a demand for flexibility.

Waldl: I also think that a camera has to be flexible but I also don’t think that the one-size-fits-all solution will be possible. Of course there are some dedicated applications that can’t be solved within the camera but when I speak about preprocessing in the camera I’m talking mostly about data reduction.

Usually we talk about vision users, but what do you think the camera of the future will look like for an automation user?

Waldl: Hopefully not too different. It must of course be robust and easy to integrate with tight synchronization. But these requirements exist elsewhere as well. Easy integration means not only plug-and-play capability, but also ease of handling and service for the end user.

Do you all think that will happen in the near future?

Böge: I think of course the standardization we saw in machine vision is also happening in automation with OPC UA or time-sensitive networking. Many of these standards are also highly interesting for vision. Even though our machine vision cameras do not have Profinet, Ethercat or other standards directly, many of them are connected to a vision system that itself is integrated in such a network. So thinking outside of your own range is very important.

Longval: What I like about automation is that it is well contained so it is possible to introduce new technologies. In vision applications that is a lot harder because there are more factors that are outside of your control, for example in non-traditional applications like traffic. So in cameras in automation you can see these innovations like AI processing or non-visible light applications first.

What role will OPC UA play?

Wiesinger: For most PC-based camera manufacturers it is already part of their development and it might be important for more intelligent cameras in the future. But of course we will not get rid of Profinet, Powerlink or Ethercat yet.

Waldl: As cameras become more intelligent, OPC UA can of course smooth the road from camera to smart camera. And of course with TSN for high-speed synchronization becoming a topic it is very important for us.

Böge: As I elaborated before, if you are connected to a PC with your standard machine vision camera it seems like it is not in your back yard, so to speak. But I’m still convinced you have to be very close to these kinds of trends and technologies and understand them because they might bring those requirements towards you. For example, years ago we integrated the precision time protocol into our GigE cameras, which is similar to TSN and other high speed synchronization protocols. Even if you probably won’t integrate OPC UA into a standard machine vision camera as a protocol, these underlying requirements have to be looked at very carefully.

What impulses will come from the embedded market for machine vision? What trends do you see?

Böge: It starts with the definition of ‘embedded’ which is very fuzzy at some point. But definitely the shrinking and higher performance we see on the processing side is also visible in cameras. Of course that does not mean for every embedded processing unit you need a board level camera as well.

Wiesinger: I think in the past deep learning and AI hyped up the embedded market. There were a lot of users from the software side with relatively little knowledge of hardware or camera technology. So the big challenge in this market is to provide more standardization to make it easier for the customers to use the hardware with the software.

Waldl: More power, more flexibility and lower cost go naturally with a robust integrated industrial solution. A low-power but powerful integrated inference controller was unthinkable a few years ago but today there are many suppliers in this field. That’s why inference controllers will be a standard function of any industrial camera in the near future.

Longval: I think we are going to see more technologies coming from the embedded market to our space because we are good at adapting them. GigE for example came from the consumer IT space and we adapted it to our domain. For us it is a good way to bring in new technologies from high volume markets like systems-on-chips.

What role do software and programming tools play in the near future?

Longval: The camera in the old days used to be a sensor, a logic board and a back camera interface of some sort, but it already isn’t like that any more. There is more software in play already, so it’s more of a solution than a camera. And we are going to see more of that because customers want to have the ability to either work with us closely or take what we have and do their own things regarding modules and applications. So to make the software and tools available will be very important.

Wiesinger: You always have to be on top of these new software tools and different operating systems as a camera manufacturer. You also have to be fast to integrate them and make it as easy as possible for your customers who are used to applying these new tools.

Böge: I agree completely. We can already see this fragmentation or high variety on the software side, so you have to integrate programming languages like Python or ROS in your SDK and make it a standard. We have been doing it with our Pylon SDK for years now and see that as a key benefit for our customers. This will be even more important in the future with software running on the camera and on embedded systems in general. You can’t just give your SDK to your customers and let them try to connect it to third party libraries on their own. At the very least you have to test all these possible combinations and ensure that it feels to customers like it comes from a single source.

Waldl: I would just add that many of the newer sensors have features that are often not provided by camera manufacturers. The tools should also provide access to these options.

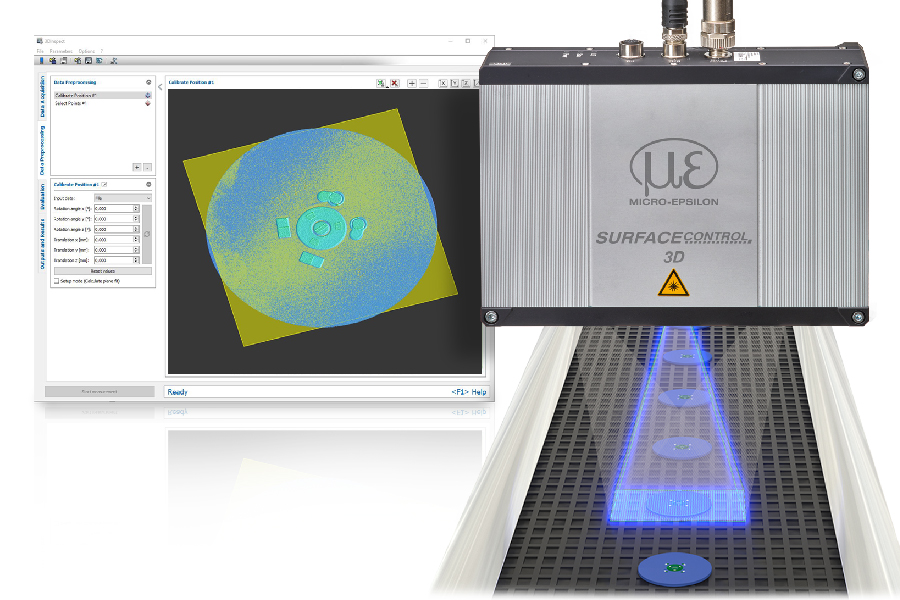

How important will 3D cameras be in the future?

Waldl: I see a big potential for the use of 3D cameras in industrial applications. We are currently evaluating how to best integrate this technology in our portfolio.

Wiesinger: For us the 3D market plays a significant role already. And looking at the market almost everyone offers some kind of 3D stereo vision or ToF camera. I think 3D won’t replace 2D cameras as the dominant part of the industry but it will continue to grow.

Longval: I think there is room for growth for 3D. We are just at the start of that and we are going to see more as the capabilities improve. It is so natural to us because we live in 3D, and bringing these capabilities to machine vision will be a key part of the future.

Böge: I agree completely. Sometimes it feels a bit like when we took the first steps in 2D: The cameras are a bit clumsy, a little bit bigger, the cost is not quite where customers are expecting it. But I think we are now at a good point in the hype cycle around 3D where people begin to understand what they realistically can do with 3D and how it can be leveraged.

Which image sensor trends are we going to see in the future? Bigger sensors, smaller pixels, more speed?

Longval: We need all of the above. There are so many different applications for vision, one person needs higher speed, another needs higher resolution. Especially the resolution, who would have thought that we have 100+MP sensors just a few years back? But it’s not the end because there is real need for even higher resolution. But it’s not only the sensors, you have to think of the other components like the optics or the interface as well. Again, sensors are important but it’s about the solution as a whole.

Böge: I agree completely. It is becoming more and more about multi-dimensional portfolio planning as well, because customers are working on completely different tangents of the vision market spectrum. But in the end you have to serve them if you want to be a full service provider in machine vision. I’m convinced you can’t go for just a part of that and be fine. You have to have smart answers and a broad portfolio to serve all these customers, because many of the different parts will be combined in a single solution. So the portfolio scaling is definitely one of the most important parts right now.

Wiesinger: Of course the trend of higher, faster, bigger will continue in all directions in the market. We all have seen it with the Sony sensors of the past few years. There is a high interest in getting more technologies like polarization, HDR, SWIR or even some intelligence into the sensor. I think the trend can be that a sensor is capable of doing many more different tasks than the standard visible light sensors.

What importance will new imaging modalities like polarization, hyperspectral, SWIR or UV have?

Waldl: For me, SWIR is more important than sensors with 100 MP and more. But we would like to have a wider wavelength range than is available today, and of course these will then be used for hyperspectral applications. However, these sensors will first have to become much cheaper to get into a price range that our customers are willing to pay for their high-volume applications.

Longval: Currently we are able to find a lot of use in multispectral instead of going full blown hyperspectral and can still achieve a lot of the same. In regard to UV we use a coating to achieve some level of UV capabilities in our cameras. So there is definitely a need for these technologies if they allow us to do it in a better way because the applications are there.

Wiesinger: The price is key. In our experience with polarization for example there are very good applications under development, but most customers are still waiting for lower prices. So it’s still a niche market but I am confident it will become more important. In the end it’s a question of creating the volume and getting the price down, which is happening already.

Böge: Yes, the business case is definitely the issue today. With SWIR I’m convinced that once the price comes down it will enter the mainstream market and have a huge impact there. In ascending order regarding the level of interest I’d say polarization, UV and then SWIR.

How can we ensure short and reliable delivery times for cameras in the future?

Böge: Honestly, if I see big car manufacturers parking their almost finished cars for months because there is one chip missing, I don’t think that the machine vision industry will have a better solution to prevent that kind of impact. But I think what will be important is to invest in active supply chain management, second source strategies or obsolescence management. And I’m sure that in the future it will become more important to have a certain size in the market to get a seat at the table with the suppliers.

Longval: I agree with that, I think in that case size does matter. But I would add that it also matters to manage your customers’ expectations and then try to do your best to meet these expectations. (bfi)