Routschka: The number of image-based inspections is continuously increasing, and you won't have so many vision experts in the future, especially in Germany. Addressing this should be the main goal of bringing AI applications into production.

Güldner: You need to serve these megatrends: one of them is the shortage of workers, and another is of course sustainability. So AI will penetrate more applications to replace workers that we will not have in the future. This is not due to prices; it's because of the lack of workers.

How can I compare AI performance and select the best algorithm for my application?

Eckstein: You really have to define the strategic value of AI for your company. If it is a core element of your strategy, where you want to add value yourself by offering AI, then you have to do as much as possible yourself. If you think it is strategic, but just part of your portfolio and not your core product or competency, then you should look for a partner that you can work with over years to improve your products. If you are just looking for a very quick solution for one machine or application, then you can do this with an in-house AI expert, an open-source tool, or an integrator.

Routschka: There is no such thing as the best universal solution. What we must not forget is customer satisfaction, and this depends not only on hard facts, but also on customization options and feel-good factors… What good is the best performance if I can't find my way around the system because the setup is too complex? Customers often rely on long partnerships with an integrator because there is something beyond the hard facts, like trust. That is something AI needs to build up as well.

Droege: The issue of trust is important, and the field of competing AI companies is so scattered that it's pretty hard to see through the smoke. We even allow our customers to test the entire AI system for free in the beginning. We define what the system needs to do, how accurate it needs to be, and how fast. Then we actually perform against these KPIs (key performance indicators), and if we don't achieve them, the customer doesn't pay anything. In this way we create trust, because if we did this with all customers and never got an order, this business model would be very short-lived.
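Editor's illustration: the acceptance test described here (agree on accuracy and speed targets up front, then measure against them) can be sketched in a few lines of Python. This is only a minimal sketch under assumptions: the names `Kpi` and `evaluate_against_kpis`, the choice of plain accuracy as the quality metric, and the use of the slowest image as the speed metric are all hypothetical and not taken from the interview.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    """Targets agreed with the customer (hypothetical structure)."""
    min_accuracy: float           # required fraction of correct decisions
    max_seconds_per_image: float  # required worst-case inspection time


def evaluate_against_kpis(predictions, labels, seconds_per_image, kpi):
    """Return (passed, accuracy, slowest) for one trial run.

    Assumption: one prediction, one ground-truth label, and one timing
    per inspected image, all in the same order.
    """
    if not labels or not (len(predictions) == len(labels) == len(seconds_per_image)):
        raise ValueError("need equally long, non-empty inputs")

    correct = sum(p == y for p, y in zip(predictions, labels))
    accuracy = correct / len(labels)
    slowest = max(seconds_per_image)

    passed = accuracy >= kpi.min_accuracy and slowest <= kpi.max_seconds_per_image
    return passed, accuracy, slowest


if __name__ == "__main__":
    # Made-up trial data, for illustration only.
    kpi = Kpi(min_accuracy=0.7, max_seconds_per_image=0.05)
    result = evaluate_against_kpis(
        predictions=["ok", "defect", "ok", "ok"],
        labels=["ok", "defect", "defect", "ok"],
        seconds_per_image=[0.02, 0.03, 0.04, 0.01],
        kpi=kpi,
    )
    print(result)
```

In a real inspection project, accuracy alone is often not enough; separate targets for missed defects and false rejects would be a natural extension of the same structure.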

What role will synthetic images play in the future?

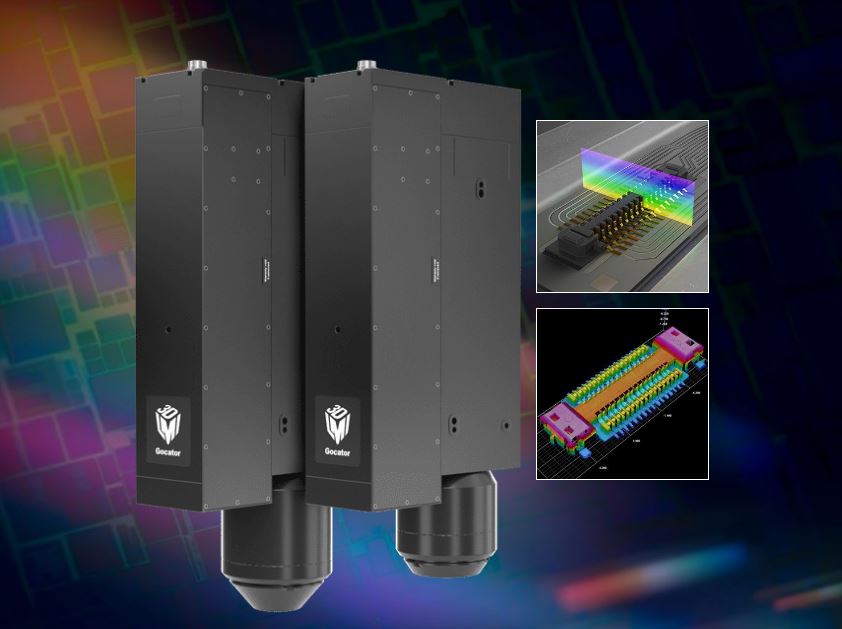

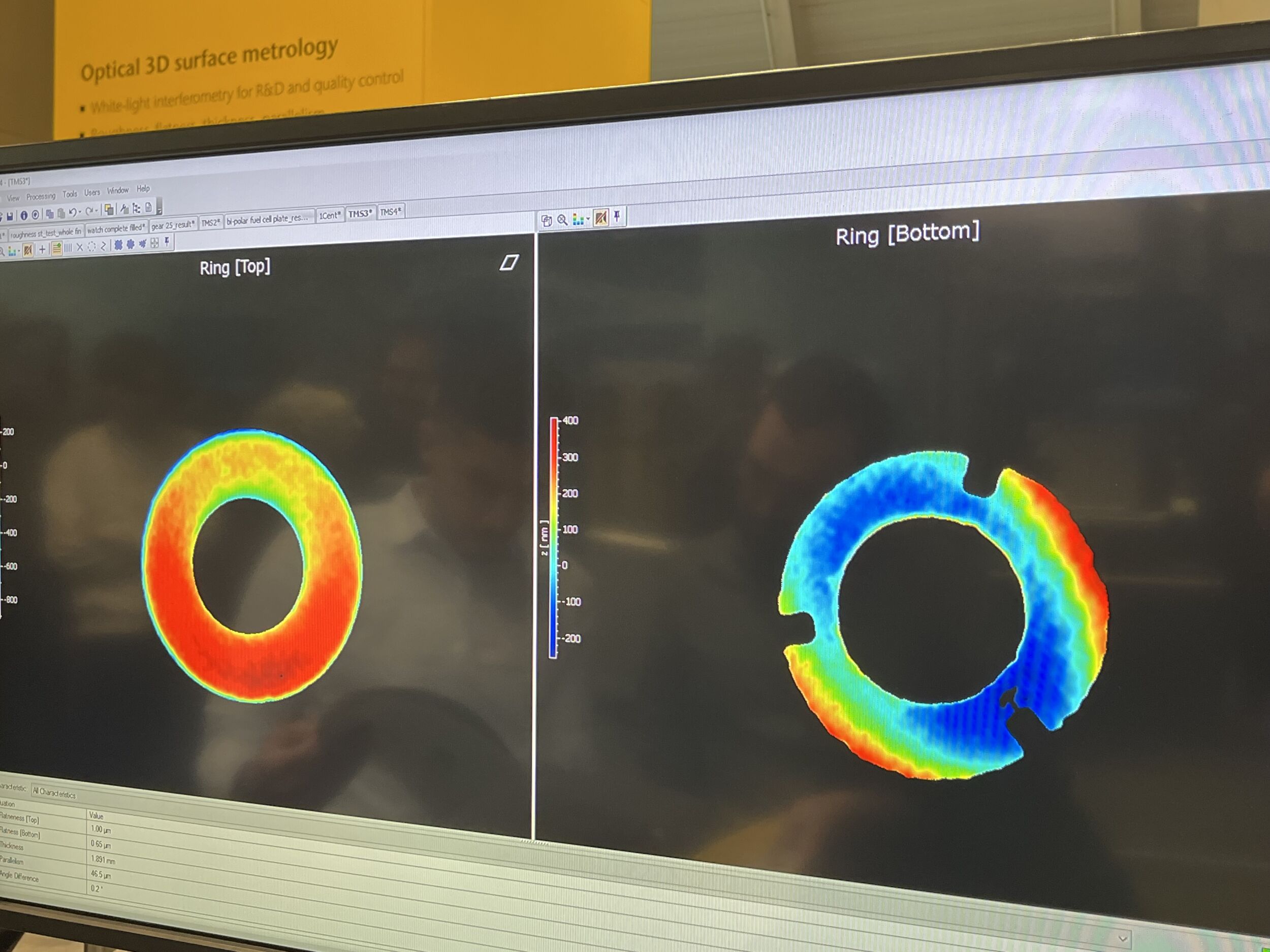

Eckstein: Synthetic data plays a big role. What you can do is increase the data size and cover edge cases. To be honest, I reached the high point of my personal hype cycle with synthetic images two years ago. Then I was really disappointed. Unfortunately, synthetic images look real to a human, but they are not real to the AI. The features are too different from actual real-life examples. For defect detection there is only very limited use; it can help with unsupervised methods where you don't have any defects at all, as in anomaly detection. If you just want some images to test a model, you can create some synthetic defects. But it can be very valuable when it comes to 3D, when you have a CAD model and generate images from it. Then you can do 60 positions of one part, because real 3D images are very expensive for the training data set and usually have low quality, especially with cheaper 3D sensors. For a pick-and-place application, synthetic images are a good solution.
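Editor's illustration: generating "60 positions of one part" from a CAD model starts with choosing the viewpoints to render from. The sketch below spreads camera positions roughly evenly over a sphere around the part using a Fibonacci lattice. That sampling scheme, the function name `camera_positions`, and the unit radius are assumptions for illustration, not the method described in the interview; the rendering step itself depends on the CAD or rendering tool used and is not shown.

```python
import math


def camera_positions(n=60, radius=1.0):
    """Return n (x, y, z) camera positions on a sphere around the origin.

    Assumption: the part sits at the origin and every camera looks at it.
    Uses a Fibonacci lattice for a roughly even spread of viewpoints.
    """
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    positions = []
    for i in range(n):
        # z runs from near +1 to near -1 in equal steps
        z = 1.0 - 2.0 * (i + 0.5) / n
        ring_radius = math.sqrt(1.0 - z * z)
        theta = golden_angle * i
        positions.append((
            radius * ring_radius * math.cos(theta),
            radius * ring_radius * math.sin(theta),
            radius * z,
        ))
    return positions


if __name__ == "__main__":
    views = camera_positions(n=60, radius=0.5)
    print(len(views), views[0])
```

Each returned position would then be handed to the renderer of choice to produce one synthetic image of the part.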

Güldner: Synthetic images are making tremendous progress thanks to AI. In the latest Photoshop version you can simply say “pigs in the garden” and the image is there. If you want to check whether the AI is working or not, separate the teams and use different approaches; that way you can actually get rid of the circular reference where you create images and defects and then test whether you can detect them.

Droege: Every AI company uses synthetic data generation to some extent, but for defect creation I don't think it's the magic bullet. If you have a generative model that can create all kinds of defects in all their variability, you have effectively solved the task already. But you then need a model that solves the task in order to generate training data for that same task, which by definition is not 100% sensible.

Eckstein: Especially if you use generative models. The theory would be that a model generated from defects would add information to your model.

Droege: We have all seen the hype about large language models, but when you look at large vision models, there is not much there. Mostly these models have been tested on internet data, and this is very different. There are no open-source industrial image data sets with the ideal large variability of different defects. These would help tremendously, and not only for preparing purposes. You can bring down the number of actual samples that you need, and so you can work a similar problem from a different angle.

What about the scalability of AI systems: Is copy and paste possible?

Droege: In some cases, it is close to it. We have several systems that we scale up to several lines, and there are small differences in rotation, position, or lighting. It's not copy and paste, but it's 95% the same, so the extra work is significantly reduced. Then we have the benefit that a rare error in Mexico can also be taught to a similar system located in Germany, for example.